Integration Testing using Spring Boot, Postgres and Docker

Spring Cloud Series

- Developing Microservices using Spring Boot, Jersey, Swagger and Docker

- Integration Testing using Spring Boot, Postgres and Docker (you are here)

- Services registration and discovery using Spring Cloud Netflix Eureka Server and client-side load-balancing using Ribbon and Feign

- Centralized and versioned configuration using Spring Cloud Config Server and Git

- Routing requests and dynamically refreshing routes using Spring Cloud Zuul Server

- Microservices Sidecar pattern implementation using Postgres, Spring Cloud Netflix and Docker

- Implementing Circuit Breaker using Hystrix, Dashboard using Spring Cloud Turbine Server (work in progress)

1. OVERVIEW

The goal of integration testing is to verify that the interactions between different parts of the system work well.

Consider this simple example:

...

public class ActorDaoIT {

@Autowired

private ActorDao actorDao;

@Test

public void shouldHave200Actors() {

Assert.assertThat(this.actorDao.count(), Matchers.equalTo(200L));

}

@Test

public void shouldCreateAnActor() {

Actor actor = new Actor();

actor.setFirstName("First");

actor.setLastName("Last");

actor.setLastUpdate(new Date());

Actor created = this.actorDao.save(actor);

...

}

...

}

A successful run of this integration test validates that:

- Class attribute actorDao is found in the dependency injection container.

- If there are multiple implementations of ActorDao interface, the dependency injection container is able to sort out which one to use.

- Credentials needed to communicate with the backend database are correct.

- Actor class attributes are correctly mapped to database column names.

- actor table has exactly 200 rows.

This trivial integration test takes care of possible issues that unit testing wouldn’t be able to find. It comes at a cost though: a backend database needs to be up and running. If the resources used by the integration test also include a message broker or a text-based search engine, instances of such services would need to be running and reachable. As can be seen, extra effort is needed to provision and maintain VMs / Servers / … for integration tests to interact with.

In this blog entry, a continuation of the Spring Cloud Series, I’ll show and explain how to implement integration testing using Spring Boot, Postgres and Docker: pulling Docker images, starting container(s), running the DAO-related tests against one or multiple Docker containers and disposing of them once the tests are completed.

2. REQUIREMENTS

- Java 7+.

- Maven 3.2+.

- Familiarity with Spring Framework.

- Docker host.

3. THE DOCKER IMAGES

I’m going to start by building a couple of Docker images: first a base Postgres Docker image, then a DVD rental DB Docker image that extends the base image and that the integration tests will connect to once a container is started.

3.1. BASE POSTGRES DOCKER IMAGE

This image extends the official Postgres image available on Docker Hub and attempts to create a database from environment variables passed to the run command.

Here is a snippet from its Dockerfile:

...

ENV DB_NAME dbName

ENV DB_USER dbUser

ENV DB_PASSWD dbPassword

RUN mkdir -p /docker-entrypoint-initdb.d

ADD scripts/db-init.sh /docker-entrypoint-initdb.d/

RUN chmod 755 /docker-entrypoint-initdb.d/db-init.sh

...

Shell or SQL files included in the /docker-entrypoint-initdb.d directory will be automatically run during container startup. That leads to the execution of db-init.sh:

#!/bin/bash

echo "Verifying DB $DB_NAME presence ..."

result=`psql -v ON_ERROR_STOP=on -U "$POSTGRES_USER" -d postgres -t -c "SELECT 1 FROM pg_database WHERE datname='$DB_NAME';" | xargs`

if [[ $result == "1" ]]; then

echo "$DB_NAME DB already exists"

else

echo "$DB_NAME DB does not exist, creating it ..."

echo "Verifying role $DB_USER presence ..."

result=`psql -v ON_ERROR_STOP=on -U "$POSTGRES_USER" -d postgres -t -c "SELECT 1 FROM pg_roles WHERE rolname='$DB_USER';" | xargs`

if [[ $result == "1" ]]; then

echo "$DB_USER role already exists"

else

echo "$DB_USER role does not exist, creating it ..."

psql -v ON_ERROR_STOP=on -U "$POSTGRES_USER" <<-EOSQL

CREATE ROLE $DB_USER WITH LOGIN ENCRYPTED PASSWORD '${DB_PASSWD}';

EOSQL

echo "$DB_USER role successfully created"

fi

psql -v ON_ERROR_STOP=on -U "$POSTGRES_USER" <<-EOSQL

CREATE DATABASE $DB_NAME WITH OWNER $DB_USER TEMPLATE template0 ENCODING 'UTF8';

GRANT ALL PRIVILEGES ON DATABASE $DB_NAME TO $DB_USER;

EOSQL

result=$?

if [[ $result == "0" ]]; then

echo "$DB_NAME DB successfully created"

else

echo "$DB_NAME DB could not be created"

fi

fi

This script basically takes care of creating a Postgres role and a database, and of granting database permissions to the newly created user, in case they don’t exist. Database and role information is passed through environment variables as shown next:

docker run -d -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres -e DB_NAME=db_dvdrental -e DB_USER=user_dvdrental -e DB_PASSWD=changeit asimio/postgres:latest

Now that we have a Postgres image with the database and the role used to connect to it already created, there are a couple of options for creating and setting up the database schema:

- The 1st option is to not use any other Docker image: since we already have a database and credentials, the integration test itself (during its life cycle) would take care of setting the schema up, let’s say by using a combination of Spring’s SqlScriptsTestExecutionListener and the @Sql annotation.

- The 2nd option is to provide an image with the database schema already set up, meaning the database already includes tables, views, triggers and functions as well as the seeded data.

No matter which option is chosen, it is my opinion that integration tests:

- Should not impose a test execution order.

- Should use external resources (DB servers, message brokers, search engines, …) that closely match the resources used in the production environment.

- Should start from a known state, meaning it would be preferable for every test to have the same seeded data. This is a consequence of meeting the first bullet.

This is not a one-size-fits-all solution; it depends on particular needs. If, for instance, the database used by the application is an in-memory product, it might be faster to use the same container and drop and re-create the tables before each test starts instead of starting a new container for every test.

I decided to go with the 2nd option, creating a Docker image with the schema and seeded data already set up. In this example each integration test is going to start a new container, where the schema doesn’t need to be created and the data, which might be a lot, doesn’t need to be seeded during container startup. The next section covers this option.

3.2. DVD RENTAL DB POSTGRES DOCKER IMAGE

Pagila is a DVD rental Postgres database ported from MySQL’s Sakila. It’s the database used to run the integration tests discussed in this blog post.

Let’s take a look at relevant commands of its Dockerfile:

...

VOLUME /tmp

RUN mkdir -p /tmp/data/db_dvdrental

ENV DB_NAME db_dvdrental

ENV DB_USER user_dvdrental

ENV DB_PASSWD changeit

COPY sql/dvdrental.tar /tmp/data/db_dvdrental/dvdrental.tar

# Seems scripts will get executed in alphabetical-sorted order, db-init.sh needs to be executed first

ADD scripts/db-restore.sh /docker-entrypoint-initdb.d/

RUN chmod 755 /docker-entrypoint-initdb.d/db-restore.sh

...

It sets environment variables with the DB name and credentials, includes a dump of the database, and copies a script that restores the DB from the dump to the /docker-entrypoint-initdb.d directory which, as I mentioned earlier, will execute the Shell and SQL scripts found in it.

The script to restore from a Postgres dump looks like:

#!/bin/bash

echo "Importing data into DB $DB_NAME"

pg_restore -U $POSTGRES_USER -d $DB_NAME /tmp/data/db_dvdrental/dvdrental.tar

echo "$DB_NAME DB restored from backup"

echo "Granting permissions in DB '$DB_NAME' to role '$DB_USER'."

psql -v ON_ERROR_STOP=on -U $POSTGRES_USER -d $DB_NAME <<-EOSQL

GRANT SELECT, INSERT, UPDATE, DELETE ON ALL TABLES IN SCHEMA public TO $DB_USER;

GRANT USAGE, SELECT ON ALL SEQUENCES IN SCHEMA public TO $DB_USER;

EOSQL

echo "Permissions granted"

To build the images, go to where asimio/postgres’s Dockerfile is located and run:

docker build -t asimio/postgres:latest .

Then go to where asimio/db_dvdrental’s Dockerfile is found and run:

docker build -t asimio/db_dvdrental:latest .

4. GENERATING JPA ENTITIES FROM DATABASE SCHEMA

I’ll use a Maven plugin to generate the JPA entities. They don’t include an @Id generation strategy, but it’s a very good starting point that saves a lot of the time it would take to create the POJOs manually. The relevant section in pom.xml looks like:

...

<properties>

...

<postgresql.version>9.4-1206-jdbc42</postgresql.version>

...

</properties>

...

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>hibernate3-maven-plugin</artifactId>

<version>2.2</version>

<configuration>

<components>

<component>

<name>hbm2java</name>

<implementation>jdbcconfiguration</implementation>

<outputDirectory>target/generated-sources/hibernate3</outputDirectory>

</component>

</components>

<componentProperties>

<revengfile>src/main/resources/reveng/db_dvdrental.reveng.xml</revengfile>

<propertyfile>src/main/resources/reveng/db_dvdrental.hibernate.properties</propertyfile>

<packagename>com.asimio.dvdrental.model</packagename>

<jdk5>true</jdk5>

<ejb3>true</ejb3>

</componentProperties>

</configuration>

<dependencies>

<dependency>

<groupId>cglib</groupId>

<artifactId>cglib-nodep</artifactId>

<version>2.2.2</version>

</dependency>

<dependency>

<groupId>org.postgresql</groupId>

<artifactId>postgresql</artifactId>

<version>${postgresql.version}</version>

</dependency>

</dependencies>

</plugin>

...

Note: use 9.4-1206-jdbc41 as postgresql.version if you are using Java 7.

The plugin configuration references db_dvdrental.reveng.xml, which includes the schema we would like to use for the reverse engineering task of generating the POJOs:

...

<hibernate-reverse-engineering>

<schema-selection match-schema="public" />

</hibernate-reverse-engineering>

It also references db_dvdrental.hibernate.properties, which includes the JDBC connection properties used to connect to the database to read the schema from:

hibernate.connection.driver_class=org.postgresql.Driver

hibernate.connection.url=jdbc:postgresql://${docker.host}:5432/db_dvdrental

hibernate.connection.username=user_dvdrental

hibernate.connection.password=changeit

At this point all we need is to start a Docker container with the db_dvdrental DB set up and run a Maven command to generate the POJOs. To start the container just run this command:

docker run -d -p 5432:5432 -e POSTGRES_USER=postgres -e POSTGRES_PASSWORD=postgres -e DB_NAME=db_dvdrental -e DB_USER=user_dvdrental -e DB_PASSWD=changeit asimio/db_dvdrental:latest

In case DOCKER_HOST is set in your environment, run the following Maven command as is; otherwise hard-code docker.host to the IP where the Docker host can be found:

mvn hibernate3:hbm2java -Ddocker.host=`echo $DOCKER_HOST | sed "s/^tcp:\/\///" | sed "s/:.*$//"`

The JPA entities should have been generated in target/generated-sources/hibernate3. The resulting package would have to be copied to src/main/java.
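The sed pipeline used to derive docker.host strips a leading tcp:// scheme and everything from the first colon onwards. As a rough illustration of the same transformation in Java, here is a minimal sketch; DockerHostParser and hostOf are hypothetical names that are not part of the demo project, and like the sed version it assumes an IPv4 address or host name rather than an IPv6 literal.

```java
import java.util.Objects;

/** Hypothetical helper mirroring the sed pipeline applied to DOCKER_HOST. */
final class DockerHostParser {

    private DockerHostParser() {
    }

    /** Returns only the host part of values such as tcp://172.16.69.133:2376. */
    static String hostOf(String dockerHost) {
        Objects.requireNonNull(dockerHost, "dockerHost");
        // Equivalent of: sed "s/^tcp:\/\///"
        String withoutScheme = dockerHost.replaceFirst("^tcp://", "");
        // Equivalent of: sed "s/:.*$//" (drops the first colon and what follows)
        return withoutScheme.replaceFirst(":.*$", "");
    }

    public static void main(String[] args) {
        String dockerHost = System.getenv("DOCKER_HOST");
        if (dockerHost == null) {
            System.err.println("DOCKER_HOST is not set");
            return;
        }
        System.out.println(hostOf(dockerHost));
    }
}
```

A value without a scheme or port passes through unchanged, so the result can be used directly as the docker.host argument.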

5. TESTEXECUTIONLISTENER SUPPORTING CODE

A custom Spring TestExecutionListener implementation hooks into the integration test life cycle to provision Docker containers before the test gets executed. This is a snippet of such an implementation:

...

public class DockerizedTestExecutionListener extends AbstractTestExecutionListener {

...

private DockerClient docker;

private Set<String> containerIds = Sets.newConcurrentHashSet();

@Override

public void beforeTestClass(TestContext testContext) throws Exception {

final DockerConfig dockerConfig = (DockerConfig) TestContextUtil.getClassAnnotationConfiguration(testContext, DockerConfig.class);

this.validateDockerConfig(dockerConfig);

final String image = dockerConfig.image();

this.docker = this.createDockerClient(dockerConfig);

LOG.debug("Pulling image '{}' from Docker registry ...", image);

this.docker.pull(image);

LOG.debug("Completed pulling image '{}' from Docker registry", image);

if (DockerConfig.ContainerStartMode.ONCE == dockerConfig.startMode()) {

this.startContainer(testContext);

}

super.beforeTestClass(testContext);

}

@Override

public void prepareTestInstance(TestContext testContext) throws Exception {

final DockerConfig dockerConfig = (DockerConfig) TestContextUtil.getClassAnnotationConfiguration(testContext, DockerConfig.class);

if (DockerConfig.ContainerStartMode.FOR_EACH_TEST == dockerConfig.startMode()) {

this.startContainer(testContext);

}

super.prepareTestInstance(testContext);

}

@Override

public void afterTestClass(TestContext testContext) throws Exception {

try {

super.afterTestClass(testContext);

for (String containerId : this.containerIds) {

LOG.debug("Stopping container: {}, timeout to kill: {}", containerId, DEFAULT_STOP_WAIT_BEFORE_KILLING_CONTAINER_IN_SECONDS);

this.docker.stopContainer(containerId, DEFAULT_STOP_WAIT_BEFORE_KILLING_CONTAINER_IN_SECONDS);

LOG.debug("Removing container: {}", containerId);

this.docker.removeContainer(containerId, RemoveContainerParam.forceKill());

}

} finally {

LOG.debug("Final cleanup");

IOUtils.closeQuietly(this.docker);

}

}

...

private void startContainer(TestContext testContext) throws Exception {

LOG.debug("Starting docker container in prepareTestInstance() to make System properties available to Spring context ...");

final DockerConfig dockerConfig = (DockerConfig) TestContextUtil.getClassAnnotationConfiguration(testContext, DockerConfig.class);

final String image = dockerConfig.image();

// Bind container ports to automatically allocated available host ports

final int[] containerToHostRandomPorts = dockerConfig.containerToHostRandomPorts();

final Map<String, List<PortBinding>> portBindings = this.bindContainerToHostRandomPorts(this.docker, containerToHostRandomPorts);

// Creates container with exposed ports, makes host ports available to outside

final HostConfig hostConfig = HostConfig.builder().

portBindings(portBindings).

publishAllPorts(true).

build();

final ContainerConfig containerConfig = ContainerConfig.builder().

hostConfig(hostConfig).

image(image).

build();

LOG.debug("Creating container for image: {}", image);

final ContainerCreation creation = this.docker.createContainer(containerConfig);

final String id = creation.id();

LOG.debug("Created container [image={}, containerId={}]", image, id);

// Stores the container Id for later removal

this.containerIds.add(id);

// Starts container

this.docker.startContainer(id);

LOG.debug("Started container: {}", id);

Set<String> hostPorts = Sets.newHashSet();

// Sets published host ports to system properties so that test method can connect through it

final ContainerInfo info = this.docker.inspectContainer(id);

final Map<String, List<PortBinding>> infoPorts = info.networkSettings().ports();

for (int port : containerToHostRandomPorts) {

final String hostPort = infoPorts.get(String.format("%s/tcp", port)).iterator().next().hostPort();

hostPorts.add(hostPort);

final String hostToContainerPortMapPropName = String.format(HOST_PORT_SYS_PROPERTY_NAME_PATTERN, port);

System.getProperties().put(hostToContainerPortMapPropName, hostPort);

LOG.debug(String.format("Mapped ports host=%s to container=%s via System property=%s", hostPort, port, hostToContainerPortMapPropName));

}

// Makes sure ports are LISTENing before running the test

if (dockerConfig.waitForPorts()) {

LOG.debug("Waiting for host ports [{}] ...", StringUtils.join(hostPorts, ", "));

final Collection<Integer> intHostPorts = Collections2.transform(hostPorts,

new Function<String, Integer>() {

@Override

public Integer apply(String arg) {

return Integer.valueOf(arg);

}

}

);

NetworkUtil.waitForPort(this.docker.getHost(), intHostPorts, DEFAULT_PORT_WAIT_TIMEOUT_IN_MILLIS);

LOG.debug("All ports are now listening");

}

}

...

}

This class’s beforeTestClass(), prepareTestInstance() and afterTestClass() methods are used to manage the Docker containers’ life cycle:

- beforeTestClass(): gets executed only once, before the first test. Its purpose is to pull the Docker image and, depending on whether the test class re-uses the same running container or not, it might also start a container.

- prepareTestInstance(): gets called before the next test method is run. Its purpose is to start a new container if each test method requires one; otherwise the container started in beforeTestClass() is re-used.

- afterTestClass(): gets executed only once, after all tests have been executed. Its purpose is to stop and remove running containers.
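How the start mode interacts with these three callbacks can be sketched in plain Java. This is a simplified model and not the listener itself: LifecycleModel, its StartMode enum and the event strings are hypothetical stand-ins, and it assumes that the JUnit 4 runner creates a new test instance, and therefore triggers prepareTestInstance(), for every test method.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical model of the callback order; it does not talk to Docker. */
final class LifecycleModel {

    enum StartMode { ONCE, FOR_EACH_TEST }

    private final StartMode startMode;
    private final List<String> events = new ArrayList<>();
    private int containersStarted;

    LifecycleModel(StartMode startMode) {
        this.startMode = startMode;
    }

    void beforeTestClass() {
        events.add("pull image");
        if (startMode == StartMode.ONCE) {
            startContainer();
        }
    }

    void prepareTestInstance() {
        if (startMode == StartMode.FOR_EACH_TEST) {
            startContainer();
        }
    }

    void afterTestClass() {
        events.add("stop and remove " + containersStarted + " container(s)");
    }

    private void startContainer() {
        containersStarted++;
        events.add("start container #" + containersStarted);
    }

    int containersStarted() {
        return containersStarted;
    }

    List<String> events() {
        return events;
    }

    /** Simulates one test class with the given number of test methods. */
    static LifecycleModel run(StartMode mode, int testMethods) {
        LifecycleModel model = new LifecycleModel(mode);
        model.beforeTestClass();
        for (int i = 1; i <= testMethods; i++) {
            model.prepareTestInstance();
            model.events.add("run test " + i);
        }
        model.afterTestClass();
        return model;
    }

    public static void main(String[] args) {
        for (StartMode mode : StartMode.values()) {
            System.out.println(mode + " -> " + run(mode, 3).events());
        }
    }
}
```

With three test methods the model starts one container in ONCE mode and three in FOR_EACH_TEST mode, and in both cases all of them are cleaned up in afterTestClass().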

Why am I not implementing this functionality in TestExecutionListener’s beforeTestMethod() and afterTestMethod() methods if, judging by their names, they seem more suitable? The problem with these methods lies in how I’m passing information back to Spring for loading the application context.

To prevent hard-coding any port mapped from the Docker container to the Docker host, I decided to use random ports. For instance, in the demo I’m using a Postgres container that internally listens on port 5432, but it needs to be mapped to a host port for other applications to connect to the database. This host port is chosen randomly and put in the JVM System properties by the System.getProperties().put(...) call in startContainer(). It might end up with a System property like:

HOST_PORT_FOR_5432=32769

and that leads us to the next section.

6. ACTORDAOIT INTEGRATION TEST CLASS

ActorDaoIT is not that complex, let’s take a look at it:

...

@RunWith(SpringJUnit4ClassRunner.class)

@SpringApplicationConfiguration(classes = SpringbootITApplication.class)

@TestExecutionListeners({

DockerizedTestExecutionListener.class,

DependencyInjectionTestExecutionListener.class,

DirtiesContextTestExecutionListener.class

})

@DockerConfig(image = "asimio/db_dvdrental:latest",

containerToHostRandomPorts = { 5432 }, waitForPorts = true, startMode = ContainerStartMode.FOR_EACH_TEST,

registry = @RegistryConfig(email="", host="", userName="", passwd="")

)

@DirtiesContext(classMode = ClassMode.AFTER_EACH_TEST_METHOD)

@ActiveProfiles(profiles = { "test" })

public class ActorDaoIT {

@Autowired

private ActorDao actorDao;

@Test

public void shouldHave200Actors_1() {

Assert.assertThat(this.actorDao.count(), Matchers.equalTo(200L));

}

...

}

The interesting parts are the @TestExecutionListeners, @DockerConfig and @DirtiesContext annotations used for configuration.

- DockerizedTestExecutionListener, discussed earlier, is configured through @DockerConfig with information about the Docker image (its name and tag), where it will be pulled from and the container ports that will be exposed.

- DependencyInjectionTestExecutionListener is used so that actorDao is injected and available for the test to run.

- DirtiesContextTestExecutionListener is used with the @DirtiesContext annotation to cause Spring to reload the application context after each test in the class is executed.
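The waitForPorts = true setting in @DockerConfig makes the listener wait until the mapped host ports accept connections before the test runs. The NetworkUtil.waitForPort() implementation isn’t shown in this post, so the following is only a minimal sketch of the idea using plain java.net sockets; PortWaiter, its signature, the 500 ms connect timeout and the 100 ms retry delay are my assumptions and not the project’s actual code.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

/** Hypothetical helper that polls a TCP port until it accepts connections. */
final class PortWaiter {

    private PortWaiter() {
    }

    /** Returns true once host:port accepts a connection, false after the timeout. */
    static boolean waitForPort(String host, int port, long timeoutMillis)
            throws InterruptedException {
        final long deadline = System.currentTimeMillis() + timeoutMillis;
        do {
            try (Socket socket = new Socket()) {
                // Fails fast if nothing is listening yet
                socket.connect(new InetSocketAddress(host, port), 500);
                return true;
            } catch (IOException e) {
                // Not reachable yet, retry after a short pause
                Thread.sleep(100);
            }
        } while (System.currentTimeMillis() < deadline);
        return false;
    }

    public static void main(String[] args) throws Exception {
        String host = args.length > 0 ? args[0] : "localhost";
        int port = args.length > 1 ? Integer.parseInt(args[1]) : 5432;
        System.out.println(waitForPort(host, port, 10000L) ? "listening" : "timed out");
    }
}
```

Keep in mind that a port accepting TCP connections doesn’t by itself guarantee that Postgres has finished initializing, so a check like this is a lower bound on readiness.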

The reason for reloading the application context, as done in this demo, is that the JDBC URL changes depending on the host ports the container ports are mapped to, as discussed at the end of the previous section. Let’s look at the properties file used to build the data source bean:

---

spring:

profiles: test

database:

driverClassName: org.postgresql.Driver

datasource:

url: jdbc:postgresql://${docker.host}:${HOST_PORT_FOR_5432}/db_dvdrental

username: user_dvdrental

password: changeit

jpa:

database: POSTGRESQL

generate-ddl: false

Notice the HOST_PORT_FOR_5432 placeholder? After the DockerizedTestExecutionListener starts the Postgres DB Docker container, it adds a System property named HOST_PORT_FOR_5432 whose value is the randomly chosen host port. When it is time for the Spring JUnit runner to load the application context, it successfully replaces the placeholder found in the yaml file with the available property value. This only works because the Docker life cycle is managed in DockerizedTestExecutionListener’s beforeTestClass() and prepareTestInstance(), where the application context hasn’t been loaded yet, which is not the case with beforeTestMethod().
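To make the mechanics concrete, here is a minimal sketch of publishing the host port as a System property and resolving ${...} placeholders against System properties. It is a simplified stand-in for what Spring does when it builds the application context, not Spring’s own code: PlaceholderDemo and resolve() are hypothetical, and the value of HOST_PORT_SYS_PROPERTY_NAME_PATTERN is an assumption inferred from the HOST_PORT_FOR_5432 property name.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical demo of System-property based placeholder resolution. */
final class PlaceholderDemo {

    // Assumed value, inferred from the HOST_PORT_FOR_5432 property name
    static final String HOST_PORT_SYS_PROPERTY_NAME_PATTERN = "HOST_PORT_FOR_%s";

    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{([^}]+)\\}");

    private PlaceholderDemo() {
    }

    /** Replaces every ${name} in the template with System.getProperty(name). */
    static String resolve(String template) {
        Matcher matcher = PLACEHOLDER.matcher(template);
        StringBuffer resolved = new StringBuffer();
        while (matcher.find()) {
            String name = matcher.group(1);
            String value = System.getProperty(name);
            if (value == null) {
                throw new IllegalArgumentException("Could not resolve placeholder '" + name + "'");
            }
            matcher.appendReplacement(resolved, Matcher.quoteReplacement(value));
        }
        matcher.appendTail(resolved);
        return resolved.toString();
    }

    public static void main(String[] args) {
        // What the listener does after inspecting the started container
        System.setProperty(String.format(HOST_PORT_SYS_PROPERTY_NAME_PATTERN, 5432), "32769");
        // What -Ddocker.host=... does on the command line
        System.setProperty("docker.host", "172.16.69.133");
        System.out.println(resolve("jdbc:postgresql://${docker.host}:${HOST_PORT_FOR_5432}/db_dvdrental"));
        // prints jdbc:postgresql://172.16.69.133:32769/db_dvdrental
    }
}
```

The sketch fails when a property is missing, which is the situation that would arise if the container were started only after the application context had been loaded.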

If I were to use the same running container for each test, there wouldn’t be any need to reload the application context. The DirtiesContext-related listener and annotation could be removed, and @DockerConfig’s startMode could be set to ContainerStartMode.ONCE so that the Docker container is started only once through DockerizedTestExecutionListener’s beforeTestClass().

Now that the actorDao bean is created and available, each individual integration test executes as usual.

There is still another placeholder in the yaml file, docker.host, which will be addressed in the next sections.

7. CONFIGURING maven-failsafe-plugin

Following the naming convention, the integration test was named using IT as a suffix, for instance ActorDaoIT. This means maven-surefire-plugin won’t execute it during the test phase, so maven-failsafe-plugin is used instead. The relevant section from pom.xml includes:

<properties>

...

<maven-failsafe-plugin.version>2.19.1</maven-failsafe-plugin.version>

...

</properties>

...

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-failsafe-plugin</artifactId>

<version>${maven-failsafe-plugin.version}</version>

<executions>

<execution>

<goals>

<goal>integration-test</goal>

<goal>verify</goal>

</goals>

</execution>

</executions>

</plugin>

...

8. RUNNING FROM COMMAND LINE

In addition to the JAVA_HOME, M2_HOME and PATH environment variables, there are a few more that need to be set (in ~/.bashrc for instance) since they are used by Spotify’s docker-client in DockerizedTestExecutionListener.

export DOCKER_HOST=172.16.69.133:2376

export DOCKER_MACHINE_NAME=osxdocker

export DOCKER_TLS_VERIFY=1

export DOCKER_CERT_PATH=~/.docker/machine/certs

Once these variables have been sourced, the demo can be built and tested using:

mvn verify -Ddocker.host=`echo $DOCKER_HOST | sed "s/^tcp:\/\///" | sed "s/:.*$//"`

docker.host is passed as a VM argument (the DOCKER_HOST IP only) and replaced when the Spring JUnit runner creates the application context for each test.

9. RUNNING FROM ECLIPSE

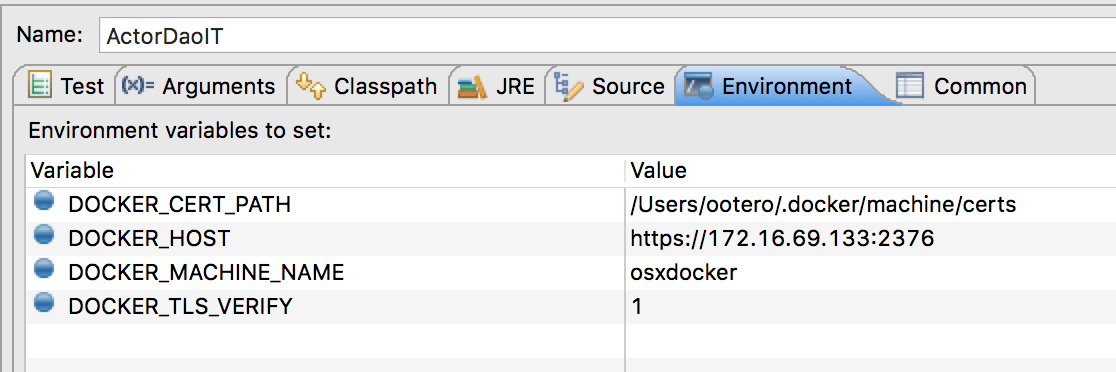

As in previous section, DOCKER_* environment variables and docker.host VM argument need to be passed to the test class in Eclipse, the way to accomplish so is to set them in the Run Configurations dialog -> Environment tab:

Run Configurations - Environment

Run Configurations - Environment

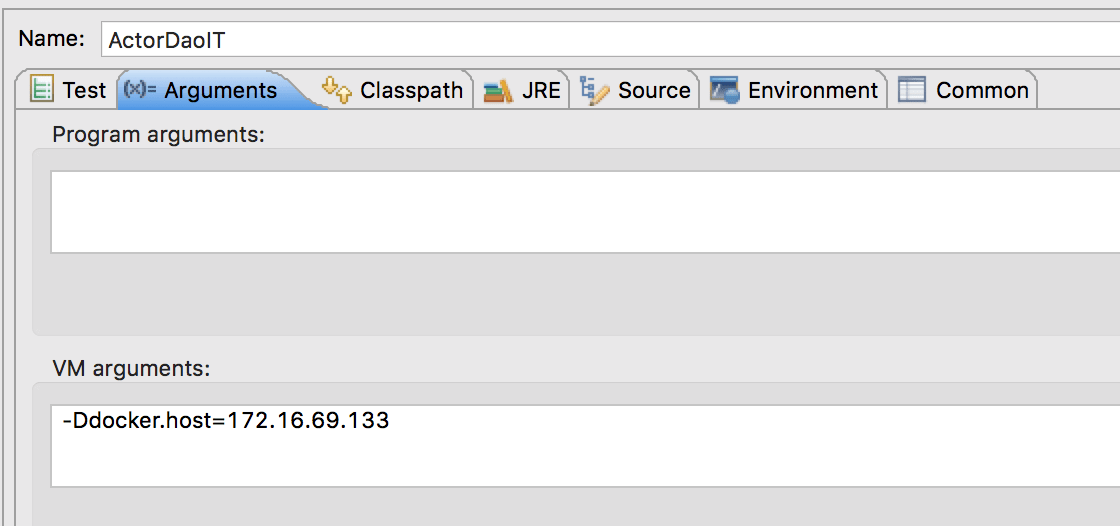

and Run Configurations dialog -> Arguments tab

Run Configurations - Arguments

Run Configurations - Arguments

and run the JUnit test as usual.

10. SOURCE CODE

Accompanying source code for this blog post can be found at:

- Postgres base and db_dvdrental DB Docker images repos

- Integration Testing Spring Boot Postgres Docker source code
