Troubleshooting Spring's RestTemplate Requests Timeout

1. OVERVIEW

Spring’s RestTemplate is one of the options for making client HTTP requests to endpoints. It facilitates communication with HTTP servers, handles the connections, and transforms XML, JSON, … request / response payloads to / from POJOs via HttpMessageConverter.

By default, RestTemplate doesn’t use a connection pool to send requests to a server. It uses a SimpleClientHttpRequestFactory, which wraps the standard JDK HttpURLConnection and takes care of opening and closing the connection.

But what if an application needs to send a large number of requests to a server? Wouldn’t it be a waste of effort to open and close a connection for each request sent to the same host? Wouldn’t it make sense to use a connection pool, the same way a JDBC connection pool is used to interact with a DB or a thread pool is used to execute tasks concurrently?

In this post I’ll configure RestTemplate to use a connection pool through a pooled implementation of the ClientHttpRequestFactory interface, run a load test using JMeter, troubleshoot request timeouts, and reconfigure the connection pool.

2. REQUIREMENTS

- Java 7+.

- Maven 3.2+.

- Familiarity with Spring Framework.

3. CREATE THE DEMO SERVICE 1

curl "https://start.spring.io/starter.tgz" -d bootVersion=1.4.2.RELEASE -d dependencies=actuator,web -d language=java -d type=maven-project -d baseDir=resttemplate-troubleshooting-svc-1 -d groupId=com.asimio.demo.api -d artifactId=resttemplate-troubleshooting-svc-1 -d version=0-SNAPSHOT | tar -xzvf -

This command will create a Maven project in a folder named resttemplate-troubleshooting-svc-1 with most of the dependencies used in the accompanying source code.

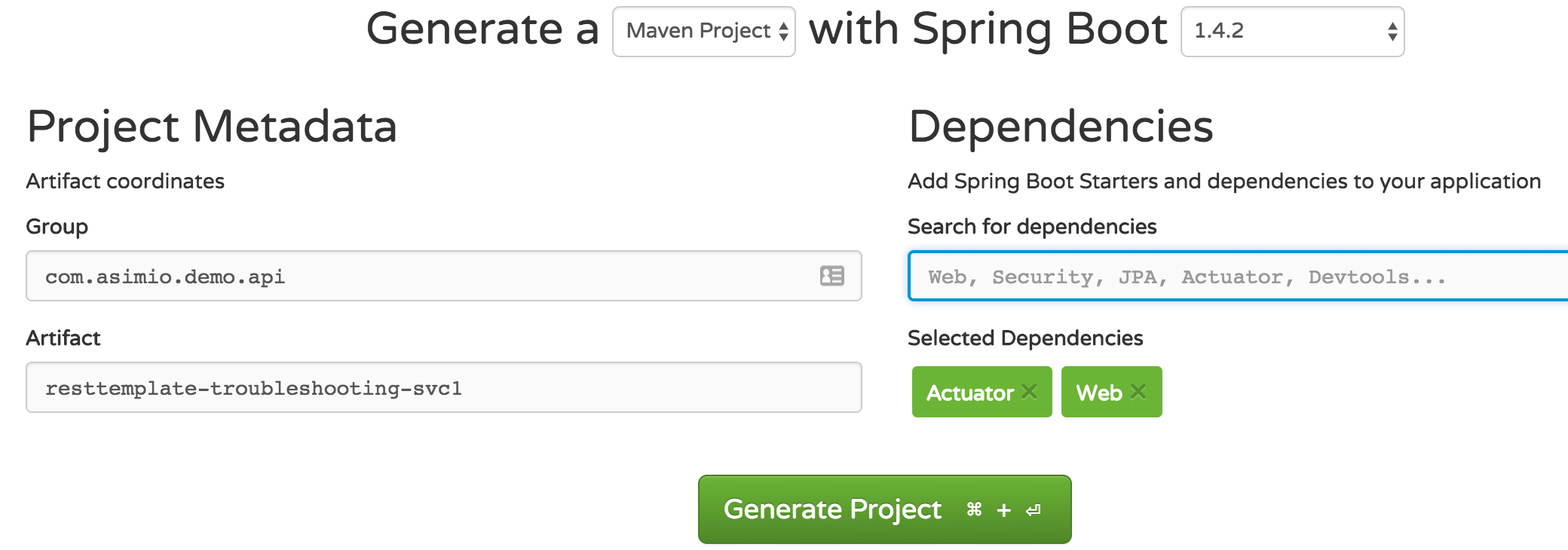

Alternatively, it can be generated with the Spring Initializr tool by selecting the Actuator and Web dependencies, as shown below:

Spring Initializr - Generate Spring Boot App - Actuator, Web

ResttemplateTroubleshootingSvc1Application.java, the entry point to the application, looks like:

package com.asimio.api.demo.main;
...
@SpringBootApplication(scanBasePackages = { "com.asimio.api.demo.rest" })
public class ResttemplateTroubleshootingSvc1Application {

    public static void main(String[] args) {
        SpringApplication.run(ResttemplateTroubleshootingSvc1Application.class, args);
    }
}

It also scans the com.asimio.api.demo.rest package, which includes the DemoResource class:

package com.asimio.api.demo.rest;
...
@RestController
@RequestMapping(value = "/demo")
public class DemoResource {

    @RequestMapping(method = RequestMethod.GET)
    public String getDemo() {
        try {
            // Simulate some computation
            Thread.sleep(150);
        } catch (InterruptedException e) {
            // Restore the interrupt flag rather than swallowing the interruption
            Thread.currentThread().interrupt();
        }
        return "demo";
    }
}

It exposes a single endpoint, /demo, whose implementation delays the response by 150 ms to simulate some computation.

4. CREATE THE DEMO SERVICE 2

curl "https://start.spring.io/starter.tgz" -d bootVersion=1.4.2.RELEASE -d dependencies=actuator,web -d language=java -d type=maven-project -d baseDir=resttemplate-troubleshooting-svc-2 -d groupId=com.asimio.demo.api -d artifactId=resttemplate-troubleshooting-svc-2 -d version=0-SNAPSHOT | tar -xzvf -

As in Create the Demo Service 1, this command will create a Maven project, this time in a folder named resttemplate-troubleshooting-svc-2. Alternatively, it can be generated with the Spring Initializr tool by selecting the Actuator and Web dependencies.

ResttemplateTroubleshootingSvc2Application.java, the entry point to this service, looks like:

...
@SpringBootApplication(scanBasePackages = { "com.asimio.api.demo.main", "com.asimio.api.demo.rest" })
public class ResttemplateTroubleshootingSvc2Application {
    ...
    @Bean
    public PoolingHttpClientConnectionManager poolingHttpClientConnectionManager() {
        PoolingHttpClientConnectionManager result = new PoolingHttpClientConnectionManager();
        // Upper bound on connections across all routes
        result.setMaxTotal(20);
        return result;
    }

    @Bean
    public RequestConfig requestConfig() {
        RequestConfig result = RequestConfig.custom()
            .setConnectionRequestTimeout(2000) // ms to wait for a connection from the pool
            .setConnectTimeout(2000)           // ms to establish the connection
            .setSocketTimeout(2000)            // ms to wait for data on the socket
            .build();
        return result;
    }

    @Bean
    public CloseableHttpClient httpClient(PoolingHttpClientConnectionManager poolingHttpClientConnectionManager, RequestConfig requestConfig) {
        CloseableHttpClient result = HttpClientBuilder
            .create()
            .setConnectionManager(poolingHttpClientConnectionManager)
            .setDefaultRequestConfig(requestConfig)
            .build();
        return result;
    }

    @Bean
    public RestTemplate restTemplate(HttpClient httpClient) {
        HttpComponentsClientHttpRequestFactory requestFactory = new HttpComponentsClientHttpRequestFactory();
        requestFactory.setHttpClient(httpClient);
        return new RestTemplate(requestFactory);
    }

    public static void main(String[] args) {
        SpringApplication.run(ResttemplateTroubleshootingSvc2Application.class, args);
    }
}

It configures a restTemplate bean with its ClientHttpRequestFactory set to HttpComponentsClientHttpRequestFactory, an implementation based on Apache HttpComponents HttpClient that replaces the default JDK-based implementation. This request factory is configured with an HttpClient that has timeout-related properties (a better practice would be to set those via a config file such as application.yml) and a pooling connection manager with a maximum of 20 connections.
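For comparison, here is a minimal sketch of the same timeouts on the JDK’s HttpURLConnection, the class the default request factory wraps. The class and method names (JdkTimeoutSketch, configure) are made up for this illustration. The connect and read timeouts correspond to setConnectTimeout and setSocketTimeout above; the connection request timeout, which bounds the wait for a pooled connection, has no direct equivalent in this API because there is no pool to lease from.

```java
import java.io.IOException;
import java.net.HttpURLConnection;
import java.net.URI;

final class JdkTimeoutSketch {

    // Creates an HttpURLConnection for the given URL and applies the timeouts.
    // openConnection() only builds the object; no network I/O happens here.
    static HttpURLConnection configure(String url, int timeoutMillis) throws IOException {
        HttpURLConnection connection =
                (HttpURLConnection) URI.create(url).toURL().openConnection();
        connection.setConnectTimeout(timeoutMillis); // time allowed to establish the connection
        connection.setReadTimeout(timeoutMillis);    // time allowed to wait for data on the socket
        return connection;
    }

    public static void main(String[] args) throws IOException {
        HttpURLConnection connection = configure("http://localhost:8800/demo", 2000);
        System.out.println(connection.getConnectTimeout() + " / " + connection.getReadTimeout());
    }
}
```

Keeping the three timeouts apart matters later in this post: the error seen under load comes from the pool wait, not from connecting to or reading from Demo Service 1.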

It also scans the com.asimio.api.demo.rest package, which includes another DemoResource class:

package com.asimio.api.demo.rest;
...
@RestController
@RequestMapping(value = "/delegate")
public class DemoResource {

    private RestTemplate restTemplate;

    @RequestMapping(path = "/demo", method = RequestMethod.GET)
    public String getDemoDelegate() {
        return this.restTemplate.getForObject("http://localhost:8800/demo", String.class);
    }

    @Autowired
    public void setRestTemplate(RestTemplate restTemplate) {
        this.restTemplate = restTemplate;
    }
}

It exposes a single endpoint, /delegate/demo, whose implementation delegates requests to Demo Service 1’s /demo endpoint using the injected RestTemplate discussed earlier.

localhost:8800 was hard-coded to simplify this tutorial, but a better approach is either to set the location of Demo Service 1 using a configuration property in application.yml or to rely on Microservices Registration and Discovery using Spring Cloud.
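As a rough illustration of the configuration-property idea, and not the way the accompanying source code does it, the base URL could be resolved from a property with the tutorial’s value as a fallback. The property name demo.service1.baseUrl and the class DemoServiceLocation are hypothetical; in a Spring Boot application the value would more naturally be injected from application.yml.

```java
final class DemoServiceLocation {

    // Hypothetical property name, chosen only for this sketch.
    static final String BASE_URL_PROPERTY = "demo.service1.baseUrl";
    static final String DEFAULT_BASE_URL = "http://localhost:8800";

    // Returns the configured base URL, or the tutorial's default when unset or blank.
    static String baseUrl(String configuredValue) {
        String value = configuredValue == null ? "" : configuredValue.trim();
        if (value.isEmpty()) {
            return DEFAULT_BASE_URL;
        }
        // Drop a trailing slash so that appending "/demo" never yields "//demo".
        return value.endsWith("/") ? value.substring(0, value.length() - 1) : value;
    }

    // Builds the URL of Demo Service 1's /demo endpoint from the system property.
    static String demoUrl() {
        return baseUrl(System.getProperty(BASE_URL_PROPERTY)) + "/demo";
    }
}
```

With something like this in place, getDemoDelegate() would call the URL returned by demoUrl() instead of a literal, and the target could be changed by passing -Ddemo.service1.baseUrl=... to the JVM.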

5. RUNNING BOTH SERVICES

As said earlier, to keep this tutorial simple, Demo Service 2 delegates requests to Demo Service 1 via localhost:8800, so let’s start Demo Service 1 on 8800:

cd <path to service 1>/resttemplate-troubleshooting-svc-1/

mvn spring-boot:run -Dserver.port=8800

and Demo Service 2 on 8900:

cd <path to service 2>/resttemplate-troubleshooting-svc-2

mvn spring-boot:run -Dserver.port=8900

Verifying both services work as expected:

curl -v http://localhost:8800/demo

* Trying ::1...

* TCP_NODELAY set

* Connected to localhost (::1) port 8800 (#0)

> GET /demo HTTP/1.1

> Host: localhost:8800

> User-Agent: curl/7.51.0

> Accept: */*

>

< HTTP/1.1 200

< X-Application-Context: application:8800

< Content-Type: text/plain;charset=UTF-8

< Content-Length: 4

< Date: Tue, 27 Dec 2016 18:59:10 GMT

<

* Curl_http_done: called premature == 0

* Connection #0 to host localhost left intact

demo

and

curl -v http://localhost:8900/delegate/demo

* Trying ::1...

* TCP_NODELAY set

* Connected to localhost (::1) port 8900 (#0)

> GET /delegate/demo HTTP/1.1

> Host: localhost:8900

> User-Agent: curl/7.51.0

> Accept: */*

>

< HTTP/1.1 200

< X-Application-Context: application:8900

< Content-Type: text/plain;charset=UTF-8

< Content-Length: 4

< Date: Tue, 27 Dec 2016 18:59:48 GMT

<

* Curl_http_done: called premature == 0

* Connection #0 to host localhost left intact

demo

6. LOAD-TESTING USING JMETER

I have included a JMeter script to run a load test against Demo Service 2. It can be found at resttemplate-troubleshooting-svc-2/src/test/resources/jmeter/loadTest.jmx in the accompanying source code for this blog post.

Basically, it load-tests with 60 simultaneous threads (users) and a ramp-up period of 10 minutes; each thread sleeps for 1 second before being reused for another request. Appropriate values vary case by case, but these are the settings I chose for this tutorial.
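These settings give a rough idea of how many connections to Demo Service 1 will be busy at once. The sketch below is a back-of-the-envelope estimate, not a measurement: it assumes each user spends about 150 ms waiting on Demo Service 1 (the Thread.sleep in /demo), then 1 second idle, and it ignores network overhead and any time spent queueing. The class and method names are made up for this illustration.

```java
final class LoadEstimate {

    // Average number of requests in flight when `users` clients each spend
    // `serviceMillis` waiting for a response and then `thinkMillis` idle.
    static double averageInFlight(int users, long serviceMillis, long thinkMillis) {
        return users * (double) serviceMillis / (serviceMillis + thinkMillis);
    }

    public static void main(String[] args) {
        // 60 users, 150 ms per request, 1000 ms sleep between requests
        double inFlight = averageInFlight(60, 150, 1000);
        System.out.printf("average in-flight requests: %.1f%n", inFlight);
        System.out.println("connections needed on average: " + (long) Math.ceil(inFlight));
    }
}
```

With the figures above this works out to 60 × 150 / 1150 ≈ 7.8, so once all 60 users are active roughly 8 connections need to be usable at the same time to avoid waiting on the pool.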

Just 11 minutes into the load test, issues started to show up: Demo Service 2 was failing to send requests to Demo Service 1 because it could not get a connection from the pool.

...

2016-12-20 16:26:02 INFO DispatcherServlet:508 - FrameworkServlet 'dispatcherServlet': initialization completed in 24 ms

2016-12-20 16:35:43 ERROR [dispatcherServlet]:181 - Servlet.service() for servlet [dispatcherServlet] in context with path [] threw exception [Request processing failed; nested exception is org.springframework.web.client.ResourceAccessException: I/O error on GET request for "http://localhost:8800/demo": Timeout waiting for connection from pool; nested exception is org.apache.http.conn.ConnectionPoolTimeoutException: Timeout waiting for connection from pool] with root cause

org.apache.http.conn.ConnectionPoolTimeoutException: Timeout waiting for connection from pool

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager.leaseConnection(PoolingHttpClientConnectionManager.java:286) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.conn.PoolingHttpClientConnectionManager$1.get(PoolingHttpClientConnectionManager.java:263) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.execchain.MainClientExec.execute(MainClientExec.java:190) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.execchain.ProtocolExec.execute(ProtocolExec.java:184) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.execchain.RetryExec.execute(RetryExec.java:88) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.execchain.RedirectExec.execute(RedirectExec.java:110) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.client.InternalHttpClient.doExecute(InternalHttpClient.java:184) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:82) ~[httpclient-4.5.2.jar:4.5.2]

at org.apache.http.impl.client.CloseableHttpClient.execute(CloseableHttpClient.java:55) ~[httpclient-4.5.2.jar:4.5.2]

at org.springframework.http.client.HttpComponentsClientHttpRequest.executeInternal(HttpComponentsClientHttpRequest.java:89) ~[spring-web-4.3.4.RELEASE.jar:4.3.4.RELEASE]

...

at org.apache.coyote.AbstractProtocol$ConnectionHandler.process(AbstractProtocol.java:802) [tomcat-embed-core-8.5.6.jar:8.5.6]

at org.apache.tomcat.util.net.NioEndpoint$SocketProcessor.doRun(NioEndpoint.java:1410) [tomcat-embed-core-8.5.6.jar:8.5.6]

at org.apache.tomcat.util.net.SocketProcessorBase.run(SocketProcessorBase.java:49) [tomcat-embed-core-8.5.6.jar:8.5.6]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1142) [?:1.8.0_73]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:617) [?:1.8.0_73]

at org.apache.tomcat.util.threads.TaskThread$WrappingRunnable.run(TaskThread.java:61) [tomcat-embed-core-8.5.6.jar:8.5.6]

at java.lang.Thread.run(Thread.java:745) [?:1.8.0_73]

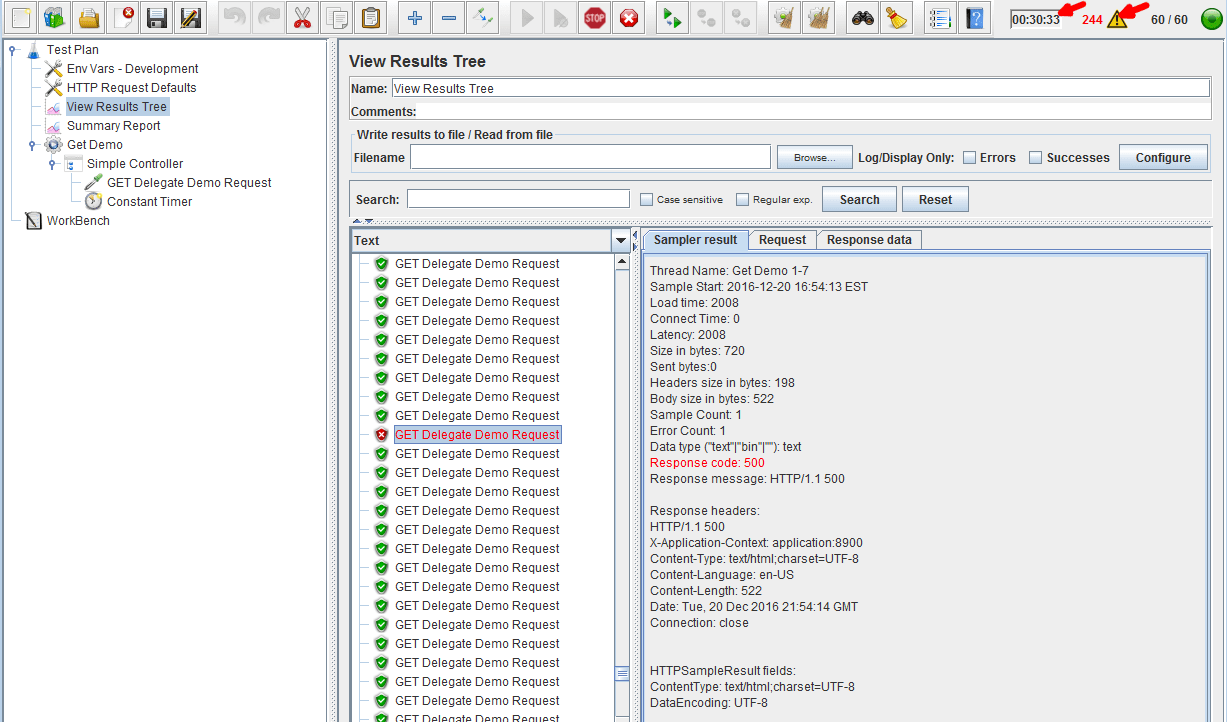

...JMeter shows HTTP status 500 for such requests:

JMeter Load Test RestTemplate Connection Pool - Error

JMeter Load Test RestTemplate Connection Pool - Error

How is this happening? Moving from an opening / closing connection approach to re-use connections to save time while instantiating the sockets now results in requests timing out, isn’t a pool of 20 connections enough? Attempts to increase maxTotal from 20 to a higher value won’t help, lets take a look at this command output:

netstat -an | grep 8800

TCP 0.0.0.0:8800 0.0.0.0:0 LISTENING

TCP 127.0.0.1:8800 127.0.0.1:61127 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:61145 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:61147 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:61153 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:61155 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:61198 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:61204 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:61205 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:61208 ESTABLISHED

TCP 127.0.0.1:61126 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61127 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61131 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61134 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61135 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61137 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61143 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61145 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61146 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61147 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61150 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61152 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61153 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61155 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61163 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61165 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61166 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61168 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61178 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61183 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61186 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61190 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:61198 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:61204 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:61205 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:61208 127.0.0.1:8800 ESTABLISHED

TCP [::]:8800 [::]:0 LISTENING

It seems that even though the pool was set to a maximum of 20 connections, it is only using 4 under this load.

This is a real-life issue we faced when running a load test for one of the services during my time at Disney, which my buddy Tim Brizard (@brizardofoz) fixed.
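To picture why requests fail rather than just slow down, here is a toy model of leasing from a bounded pool with a wait limit. It is only an analogy built on java.util.concurrent.Semaphore, not how HttpClient is implemented, and the names (LeaseTimeoutSketch, lease, release) are made up. The limit of 4 mirrors the number of established connections in the netstat output.

```java
import java.util.concurrent.Semaphore;
import java.util.concurrent.TimeUnit;

final class LeaseTimeoutSketch {

    private final Semaphore permits;

    LeaseTimeoutSketch(int maxConnections) {
        // Fair semaphore: waiting callers are served in arrival order
        this.permits = new Semaphore(maxConnections, true);
    }

    // Tries to lease a "connection"; returns false when none frees up in time.
    // In the real client that outcome surfaces as ConnectionPoolTimeoutException.
    boolean lease(long timeoutMillis) throws InterruptedException {
        return permits.tryAcquire(timeoutMillis, TimeUnit.MILLISECONDS);
    }

    // Hands a "connection" back so a waiting caller can lease it.
    void release() {
        permits.release();
    }

    public static void main(String[] args) throws InterruptedException {
        LeaseTimeoutSketch pool = new LeaseTimeoutSketch(4);
        for (int i = 0; i < 4; i++) {
            pool.lease(50); // the first four leases are granted immediately
        }
        System.out.println("fifth lease granted: " + pool.lease(50));
        pool.release();
        System.out.println("lease after a release granted: " + pool.lease(50));
    }
}
```

In the demo, the wait limit is the 2000 ms connection request timeout: when more callers need a connection than the pool will hand out and nobody releases one within that window, the caller gets the "Timeout waiting for connection from pool" error shown in the log.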

7. ADJUSTING PoolingHttpClientConnectionManager SETTINGS

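When defining the poolingHttpClientConnectionManager bean in Demo Service 2’s entry point, neither defaultMaxPerRoute nor maxPerRoute was set, so PoolingHttpClientConnectionManager fell back to its built-in per-route limit. The HttpClient 4.5 Javadoc documents that default as 2 connections per route (and 20 in total), although the netstat output above shows 4 established connections during this test. Either way, it is the per-route limit, not maxTotal, that capped the pool. Let’s increase that number by first editing Demo Service 2’s application.yml: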

...
httpConnPool:
  maxTotal: 20
  defaultMaxPerRoute: 20
  maxPerRoutes:
    -
      scheme: http
      host: localhost
      port: 8800
      maxPerRoute: 20
...

and set it during poolingHttpClientConnectionManager bean creation in ResttemplateTroubleshootingSvc2Application.java:

...
@Autowired
private HttpHostsConfiguration httpHostConfiguration;
...
@Bean
public PoolingHttpClientConnectionManager poolingHttpClientConnectionManager() {
    PoolingHttpClientConnectionManager result = new PoolingHttpClientConnectionManager();
    result.setMaxTotal(this.httpHostConfiguration.getMaxTotal());
    // Default max per route is used in case it's not set for a specific route
    result.setDefaultMaxPerRoute(this.httpHostConfiguration.getDefaultMaxPerRoute());
    // and / or
    if (CollectionUtils.isNotEmpty(this.httpHostConfiguration.getMaxPerRoutes())) {
        for (HttpHostConfiguration httpHostConfig : this.httpHostConfiguration.getMaxPerRoutes()) {
            HttpHost host = new HttpHost(httpHostConfig.getHost(), httpHostConfig.getPort(), httpHostConfig.getScheme());
            // Max per route for a specific host route
            result.setMaxPerRoute(new HttpRoute(host), httpHostConfig.getMaxPerRoute());
        }
    }
    return result;
}
...

HttpHostsConfiguration.java:

package com.asimio.api.demo.main;
...
@Configuration
@ConfigurationProperties(prefix = "httpConnPool")
public class HttpHostsConfiguration {

    private Integer maxTotal;
    private Integer defaultMaxPerRoute;
    private List<HttpHostConfiguration> maxPerRoutes;
    // Getters, Setters

    public static class HttpHostConfiguration {

        private String scheme;
        private String host;
        private Integer port;
        private Integer maxPerRoute;
        // Getters, Setters
    }
}

This overrides the defaultMaxPerRoute value used by PoolingHttpClientConnectionManager with 20 (as configured in the httpConnPool.defaultMaxPerRoute property) and sets the maximum number of connections for the route constructed from [scheme=http, host=localhost, port=8800] to 20.
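The way the three settings interact can be sketched with a small lookup model. This is a simplification for illustration only, with made-up names (RouteLimitsSketch, effectiveLimit), not the library’s code: a route uses its own limit when one is configured, falls back to the default otherwise, and can never use more than the pool-wide total.

```java
import java.util.HashMap;
import java.util.Map;

final class RouteLimitsSketch {

    private final int maxTotal;
    private final int defaultMaxPerRoute;
    private final Map<String, Integer> maxPerRoute = new HashMap<>();

    RouteLimitsSketch(int maxTotal, int defaultMaxPerRoute) {
        this.maxTotal = maxTotal;
        this.defaultMaxPerRoute = defaultMaxPerRoute;
    }

    // Registers a limit for one specific route.
    void setMaxPerRoute(String scheme, String host, int port, int max) {
        maxPerRoute.put(routeKey(scheme, host, port), max);
    }

    // Effective cap for a route: its own limit if configured, else the default,
    // and never more than the pool-wide total.
    int effectiveLimit(String scheme, String host, int port) {
        int routeLimit = maxPerRoute.getOrDefault(routeKey(scheme, host, port), defaultMaxPerRoute);
        return Math.min(routeLimit, maxTotal);
    }

    private static String routeKey(String scheme, String host, int port) {
        return scheme + "://" + host + ":" + port;
    }

    public static void main(String[] args) {
        // 4 stands in for the small per-route cap observed in the first netstat output
        RouteLimitsSketch limits = new RouteLimitsSketch(20, 4);
        System.out.println("before: " + limits.effectiveLimit("http", "localhost", 8800));
        limits.setMaxPerRoute("http", "localhost", 8800, 20);
        System.out.println("after: " + limits.effectiveLimit("http", "localhost", 8800));
    }
}
```

This is why raising maxTotal alone did not help: with a single target host, the per-route value is the smaller of the two numbers and therefore the one that matters.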

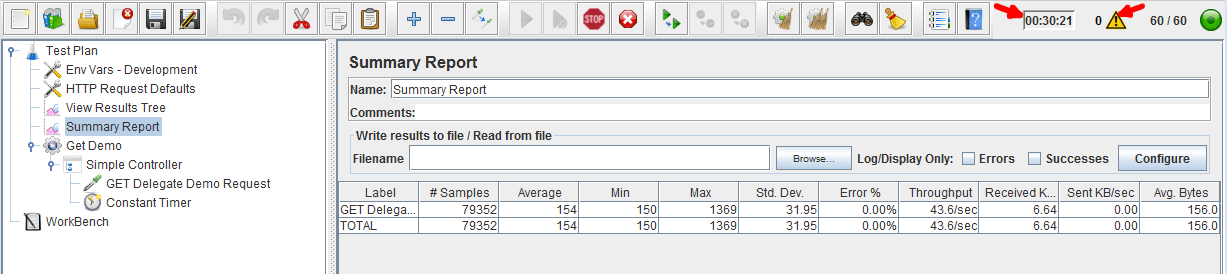

Let’s repeat the same load test:

JMeter Load Test RestTemplate Connection Pool - Success

Thirty minutes into the load test, no errors had been logged to standard error or reported in JMeter.

Let’s now run the netstat command:

netstat -an | grep 8800

TCP 0.0.0.0:8800 0.0.0.0:0 LISTENING

TCP 127.0.0.1:8800 127.0.0.1:53107 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:53285 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:53430 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:53555 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:53782 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54006 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54241 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54308 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54331 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54419 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54543 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54689 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54753 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:54937 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:55003 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55020 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55076 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55078 TIME_WAIT

TCP 127.0.0.1:8800 127.0.0.1:55132 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55153 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55155 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55316 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55318 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55344 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55359 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55383 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55416 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55438 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55443 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55499 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55625 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55627 ESTABLISHED

TCP 127.0.0.1:8800 127.0.0.1:55687 ESTABLISHED

TCP 127.0.0.1:53066 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53067 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53205 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53263 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53265 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53305 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53430 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53431 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53446 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53448 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53555 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53570 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53585 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53612 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53614 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53616 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53617 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53633 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53634 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53636 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53669 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53765 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53782 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53813 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53848 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:53865 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54075 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54099 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54170 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54172 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54263 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54265 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54310 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54331 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54333 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54460 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54534 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54545 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54681 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54689 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54691 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54733 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54827 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54868 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54885 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54887 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54902 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54904 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54935 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:54937 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:55003 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55020 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55076 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55078 127.0.0.1:8800 TIME_WAIT

TCP 127.0.0.1:55132 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55153 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55155 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55316 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55318 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55344 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55359 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55383 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55416 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55438 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55443 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55499 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55625 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55627 127.0.0.1:8800 ESTABLISHED

TCP 127.0.0.1:55687 127.0.0.1:8800 ESTABLISHED

TCP [::]:8800 [::]:0 LISTENING

More than 4 connections are now in use and the request timeout problem has been fixed. Happy troubleshooting.

Thanks for reading and, as always, feedback is very much appreciated.

8. SOURCE CODE

Accompanying source code for this blog post can be found at:

9. REFERENCES

- http://docs.spring.io/spring-framework/docs/current/javadoc-api/org/springframework/web/client/RestTemplate.html

- http://docs.spring.io/spring/docs/current/javadoc-api/org/springframework/http/client/SimpleClientHttpRequestFactory.html

- http://docs.spring.io/spring/docs/current/javadoc-api/org/springframework/http/client/HttpComponentsClientHttpRequestFactory.html

- http://www.baeldung.com/httpclient-connection-management
